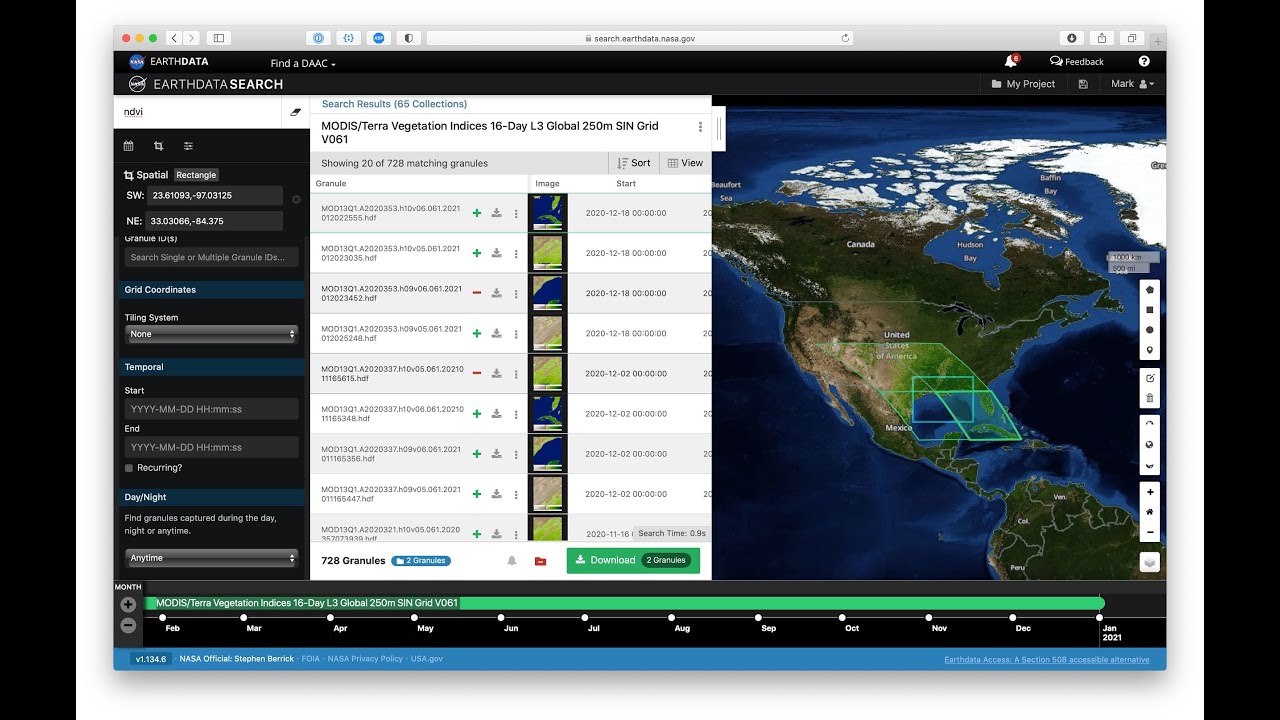

GHRC Search Portal

The GHRC Search Portal is powered by Earthdata Search and provides access to GHRC data only. It is designed to be concise and easy to use. To reach the portal, select Find Data (GHRC Search Portal) from the FIND DATA dropdown in the menu bar, or go to the following link:

Link to GHRC Search Portal (https://search.earthdata.nasa.gov/portal/ghrc/search)

GHRC Search Portal Help (https://ghrc.nsstc.nasa.gov/home/ghrc-docs/search-portal-help)

Earthdata Search

Earthdata Search provides the only means for data discovery, filtering, visualization, and access across all of NASA’s Earth science data holdings. It allows you to search by any topic, collection, or place name. Using Global Imagery Browse Services (GIBS), Earthdata Search enables high-performance, highly available data visualization when applicable. Learn more about Earthdata Search.

CMR

NASA's Common Metadata Repository (CMR) is a high-performance, high-quality, continuously evolving metadata system that catalogs all data and service metadata records for NASA's Earth Observing System Data and Information System (EOSDIS) and will be the authoritative management system for all EOSDIS metadata. These metadata records are registered, modified, discovered, and accessed through programmatic interfaces that use standard protocols and APIs. Learn more about CMR.
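As an illustration of the programmatic access described above, the following minimal sketch builds CMR search URLs for collections and granules. The helper names `build_cmr_url` and `search_cmr` are hypothetical, and the provider ID `GHRC_DAAC` and the keyword are assumptions chosen for the example; check the CMR Search API documentation for the parameters that apply to your query.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Public CMR search endpoint.
CMR_SEARCH_ROOT = "https://cmr.earthdata.nasa.gov/search"


def build_cmr_url(concept, **params):
    """Return a CMR search URL (JSON format) for 'collections' or 'granules'."""
    if concept not in ("collections", "granules"):
        raise ValueError(f"unsupported concept type: {concept!r}")
    # Sort the parameters so the generated URL is deterministic.
    query = urlencode(sorted(params.items()))
    return f"{CMR_SEARCH_ROOT}/{concept}.json?{query}"


def search_cmr(concept, **params):
    """Fetch one page of results from CMR. Requires network access."""
    with urlopen(build_cmr_url(concept, **params), timeout=30) as resp:
        payload = json.load(resp)
    # The JSON response format wraps results in feed -> entry.
    return payload["feed"]["entry"]


# Assumed example values: provider ID and keyword are illustrative only.
example_url = build_cmr_url(
    "collections", provider="GHRC_DAAC", keyword="lightning", page_size=5
)
```

Calling `search_cmr("collections", provider="GHRC_DAAC", keyword="lightning", page_size=5)` would perform the request. Granule searches generally also need a collection identifier such as `short_name`.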

General Data Access

Transition to the cloud

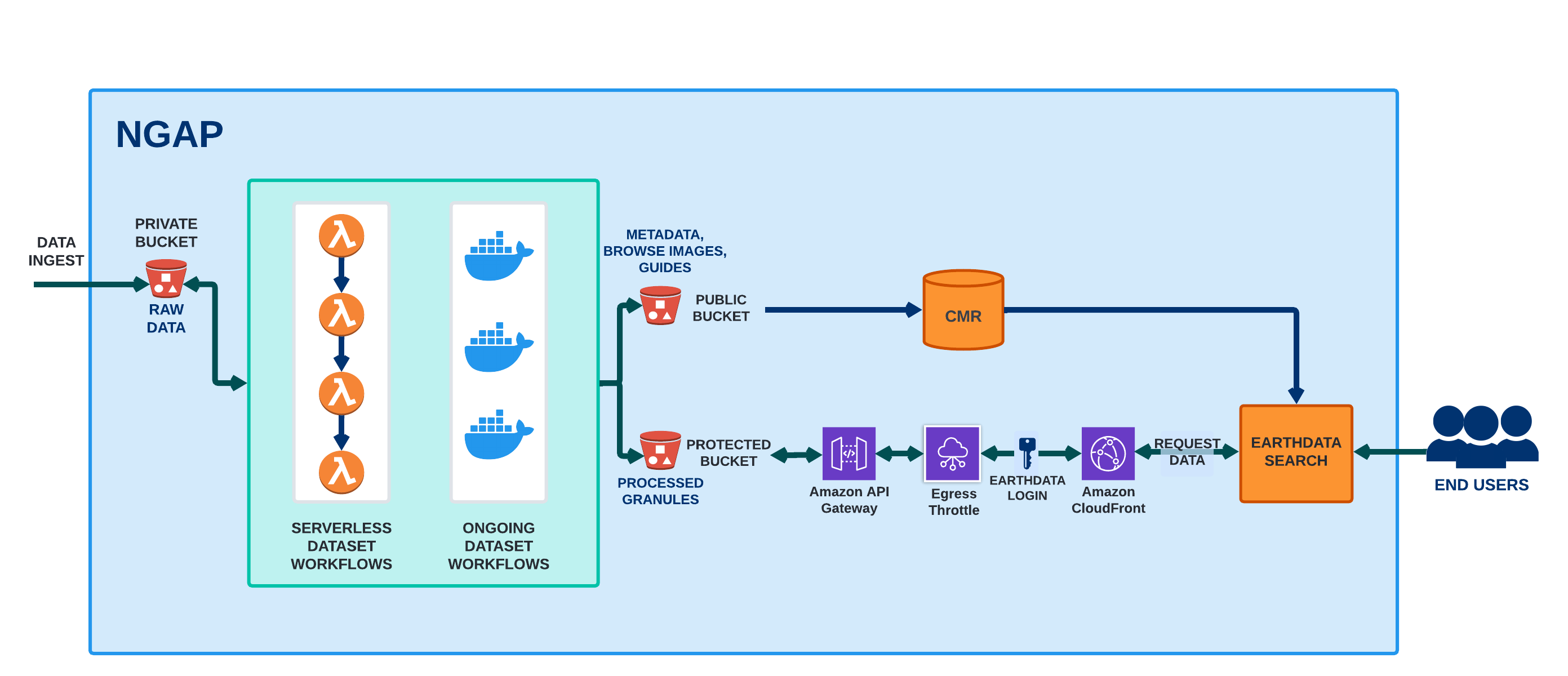

Architecture Diagram

The GHRC DAAC is the first NASA data archive center to transition its holdings to the cloud, and it has served as the cloud pathfinder to ease the transition for the other DAACs. As part of this effort, the GHRC DAAC is leading activities to develop the procedures necessary to effectively carry out the DAAC’s mission in the cloud. GHRC has migrated its legacy on-premises workflows to the NASA Next Generation Application Platform (NGAP), which runs on Amazon Web Services (AWS). GHRC is taking several steps to run a robust cloud-based data operation: adopting Cumulus, a common data ingest and archive framework; using the continuous integration and deployment pipeline provided by NASA Earthdata infrastructure; and building a verifiable, trustworthy cloud infrastructure.

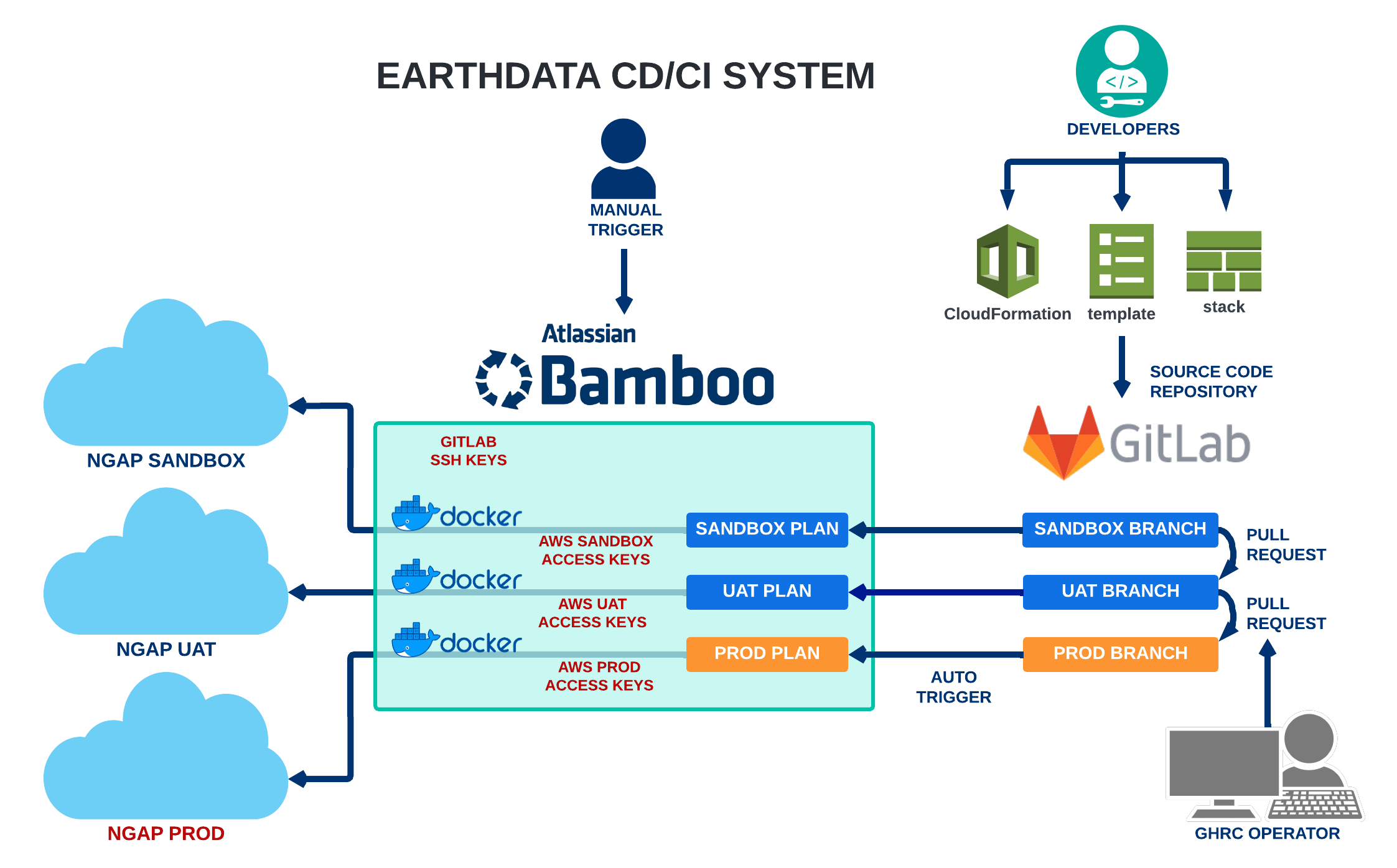

Continuous Deployment and Integration

Bamboo plans are created by Earthdata. Each plan is associated with a GitLab repository that has a custom Docker image. The plan's task runs automatically to deploy resources based on that Docker image. GitLab SSH keys need to be set up for the Bamboo plans.

Tools

FCX

The Field Campaign eXplorer (FCX) addresses challenges inherent in working with field campaign datasets by improving data management and discoverability, so that scientists can rapidly collect, visualize, and analyze these data. This tool reduces the effort involved in discovering data, is designed for event-based research, allows seamless movement between data visualization, discovery, and acquisition, and improves the usability of heterogeneous data. Proposed new work aims to incorporate along-track subsetting, time and space matching between instruments, and cloud-native analysis tools.

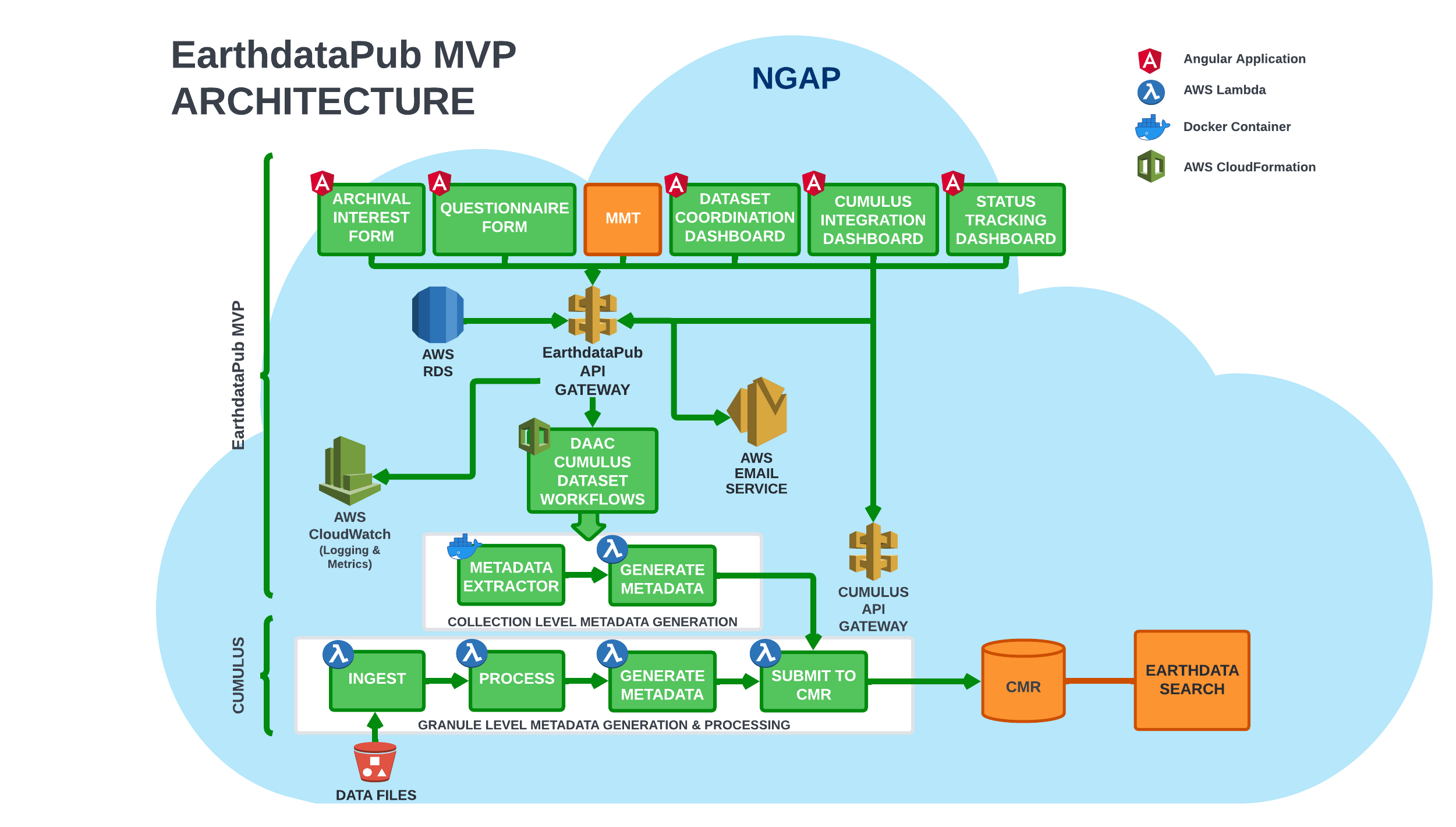

Earthdata Pub

GHRC has developed the Earthdata Publication Minimum Viable Product (Earthdata Pub MVP, or EDP MVP), a cloud-hosted solution that works with both cloud and on-premises systems and implements the communication and exchange requirements generated by the Earthdata Pub information architecture team.

Features

EDP MVP will provide the following features and components:

- Data Provider Forms

- Metadata Editor

- Data Management Team Coordination Dashboards & Views

- Integration with Cumulus

- Email Communication & Notifications

- API Layer

- Logging and Metrics Dashboard

- Users & Roles Management

The following is a short description of our plan for each component within EDP MVP:

- Data Provider Forms

  - DAPPeR provides two forms for data providers to fill out: the Archival Interest Form and the Dataset Questionnaire Form. We will implement the current set of questions from these forms in EDP MVP. When the Earthdata Pub Info sub-group develops a common set of questions and vocabulary, we will incorporate those into these forms.

  - The DAPPeR forms are currently implemented in Drupal 8, and we plan to reimplement them using Google’s Angular front-end web development framework.

- Metadata Editor

  - DAPPeR has its own metadata editor developed in Drupal 8. We would like to replace it with the Metadata Management Tool (MMT), which provides a mature set of functions targeting the Common Metadata Repository (CMR) rather than our local catalog.

- Data Management Team Coordination Dashboards & Views

  - DAPPeR provides a dataset coordination dashboard and various views to track status and users and to view metadata. These are all developed in Drupal 8. We plan to reimplement them using Google’s Angular framework.

- Integration with Cumulus

  - Cumulus provides a separate dashboard for initiating the granule-level data ingest process. Some of the information needed to initiate a granule-level data ingest is already present in the collection-level record in DAPPeR. Our operators and data management team are therefore required to use two completely different interfaces, and information available in DAPPeR must be manually copied into the Cumulus Dashboard. In addition, the NetCDF CF metadata generator module that we have been developing as part of the FY18 Cloud Migration task allows the data management team to semi-automate granule metadata generation and granule data ingest directly from DAPPeR.

  - We found that performing these extra steps to set up data ingest workflows in the cloud using Cumulus would significantly decrease our dataset publication rate. To remedy this, as part of our FY18 Cloud Migration task, we started developing three cloud-based DAPPeR UI modules as a temporary workaround to assist the data publication process until we have a cloud solution. The modules include:

    - Cumulus workflow submission module

    - NetCDF CF metadata generator module

    - Glacier restore module

  - We plan to integrate EDP MVP with Cumulus and its ecosystem to provide a much smoother experience for the data management teams so that we can keep up our data publication rate. We will incorporate the current cloud-based DAPPeR modules into EDP MVP and enhance them to meet this objective.

- Email Communication & Notifications

  - DAPPeR currently lacks a good email notification system, primarily because running a custom mail server on the NASA MSFC network is restricted. We plan to resolve this limitation with a managed email service such as Amazon Simple Email Service (SES). Amazon SES provides a managed, secure email server as well as a set of APIs that can be called from any component of an application.

- API Layer

  - DAPPeR currently interfaces with the GHRC Catalog database using an off-the-shelf package called DreamFactory, which provides a set of APIs that abstract away database calls. We plan to replace DreamFactory with Amazon API Gateway and a set of AWS Lambda functions.

- Logging and Metrics Dashboard

  - DAPPeR currently has only a very basic logging capability and no way to view metrics. We plan to use the Amazon CloudWatch service, which provides a set of APIs that can be called from any component of an application. Amazon CloudWatch also provides a metrics dashboard, so using this service would reduce the development time for this needed feature. Cumulus also stores its logs in Amazon CloudWatch, and we plan to do the same for EDP MVP.

- Users & Roles Management

  - Drupal provides strong user and role management functionality. However, we plan to integrate EDP MVP with a user authorization system that meets NASA requirements.
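To make the planned API Layer more concrete, here is a minimal sketch of an AWS Lambda function that could sit behind Amazon API Gateway using the Lambda proxy integration. It is not the EDP MVP implementation: the `dataset_id` path parameter, the in-memory `_DATASETS` lookup (standing in for the catalog), and the record fields are all hypothetical. Messages written through Python's standard `logging` module from inside Lambda end up in Amazon CloudWatch Logs, which fits the logging plan described above.

```python
import json
import logging

logger = logging.getLogger("edp_api")
logger.setLevel(logging.INFO)

# Hypothetical stand-in for the catalog; a real function would query a database.
_DATASETS = {
    "example-dataset": {"short_name": "example-dataset", "status": "in_review"},
}


def _response(status_code, body):
    """Build a response in the shape the Lambda proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def handler(event, context):
    """Look up a dataset record by the (assumed) 'dataset_id' path parameter."""
    # pathParameters is null in the proxy event when the route has none.
    path_params = event.get("pathParameters") or {}
    dataset_id = path_params.get("dataset_id")
    logger.info("dataset lookup requested: %s", dataset_id)

    record = _DATASETS.get(dataset_id)
    if record is None:
        return _response(404, {"error": "dataset not found"})
    return _response(200, record)
```

The handler can be exercised locally by passing a dictionary shaped like a proxy event, for example `handler({"pathParameters": {"dataset_id": "example-dataset"}}, None)`.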


Bulk Downloader

Tutorials

| User Goal | Cloud Tutorial or Tool |
| --- | --- |
| Find Collections | GHRC Search Portal Help |
| Find Granules | |
| Bulk Downloader | Git Repository |